2025.06.05 - In The Lost Lands

Customer Story

Notes from Herne Hill, a visual effects studio based in Toronto, Canada, on their use of Ragdoll in the production of In the Lost Lands.

Written by:

- Dominik Haase, Rigging/CFX Supervisor

- Dave Sauro, Partner and EP

- Paul Wishart, Head of CG

- Arthur Fornara, Lead Rigging/CFX TD

At Herne Hill, we integrated Ragdoll into our pipeline in late 2022.

This was primarily driven by our rigging/CFX department, and one of the earliest projects we used it on was In the Lost Lands. Below, we share some of the lessons we learned and offer tips for others who are looking to integrate Ragdoll into their studio pipeline.

Pipeline Perspective

At the core of our rigging pipeline is a proprietary API.

It contains everything from file IO utilities to OpenMaya wrappers, as well as tools like our rig builder. Instead of creating joints, IK handles, and controls by hand, we compose a rig using the API in a build script. That build script handles everything, from loading the model to exporting the individual scenes we call representations, such as main for our in-house animation team, FBX for crowds, or outsource for vendors.
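To make the idea of composing a rig in code more concrete, here is a minimal Python sketch of a build script. It runs a fixed sequence of build steps once and then exports whichever representations are requested. Every function, step, and file name here is hypothetical. The sketch mirrors only the structure described above, not Herne Hill's actual API, and the Maya work is replaced by log entries so it runs anywhere.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Rig:
    """Stand-in for a rig under construction; real steps would create Maya nodes."""
    name: str
    log: List[str] = field(default_factory=list)


# Hypothetical build steps, executed in order by the build script.
def load_model(rig: Rig) -> None:
    rig.log.append("load_model")


def build_joints(rig: Rig) -> None:
    rig.log.append("build_joints")


def build_controls(rig: Rig) -> None:
    rig.log.append("build_controls")


BUILD_STEPS: List[Callable[[Rig], None]] = [load_model, build_joints, build_controls]

# Hypothetical exporters, one per representation; each returns the file it would write.
REPRESENTATIONS: Dict[str, Callable[[Rig], str]] = {
    "main": lambda rig: f"{rig.name}_main.ma",            # in-house animation
    "fbx": lambda rig: f"{rig.name}_crowd.fbx",           # crowds
    "outsource": lambda rig: f"{rig.name}_outsource.ma",  # vendors
}


def build(name: str, representations: List[str]) -> Dict[str, str]:
    """Build the rig once, then export each requested representation from it."""
    rig = Rig(name)
    for step in BUILD_STEPS:
        step(rig)
    return {rep: REPRESENTATIONS[rep](rig) for rep in representations}
```

For example, `build("horse", ["main", "fbx"])` returns `{"main": "horse_main.ma", "fbx": "horse_crowd.fbx"}`. Because every representation comes out of the same scripted build, a fix made in the script reaches all of them on the next rebuild.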

Our CFX pipeline runs entirely through Houdini, with the majority of setups being Vellum hair, cloth, and muscle simulations. Rigging and CFX are mostly handled by the same artists, as we believe the two disciplines are two sides of the same coin.

Before Ragdoll

Our pre-Ragdoll workflow for characters with clothing was fairly standard:

- Skinweight the cloth to the body joints and include a visibility switch.

- (Optionally) add one or two joints to let animators move cloth out of the way if necessary.

In practice, though, animators would generally just hide that geometry. The downside was that the animation output was often not representative of the final shot, so we usually had to wait for CFX artists to run their cloth simulations before assessing the full performance.

The same goes for dynamic props interacting with characters, such as ropes or chains. Even with a flexible rig, animators would need lots of time and iterations to create physically plausible animations. Combine that with requests for CFX to enhance the dynamics by actually simulating the prop, and you end up with a big mess.

With Ragdoll, we were hoping to achieve two things:

- Decrease the time to a first representative version of our shot

- Increase the quality of our animation outputs

Considerations

Who builds the puppets?

Whoever wants to! Some of our animators are eager to build Ragdoll puppets, so we let them, mostly for one-off shots. Having full access to and control over the Ragdoll puppet lets them animate with Ragdoll directly (recall the demo of finger interactions).

But not every animator wants to configure simulation settings. Since our riggers have experience setting up simulations (and build the rigs anyway), we let them do it. Through multiple iterations we arrived at a fairly automated workflow for building and managing the puppets which we'll discuss in more detail later.

Which tools had to be rewritten/changed to accommodate Ragdoll puppets?

For us, fortunately none. We already had the ability to publish arbitrary rig representations for our animators. And since Ragdoll outputs animation curves on rig controls, it's seamless from a tooling perspective.

What needed to change in the workflow?

- As Ragdoll works in the animation scene, controls for the desired motion have to exist in the first place. This meant adding joint chains to geometry that we previously would not have rigged, relying on vertex simulations instead.

- We later learned that the way we model geometry, particularly cloth, had become increasingly important, and that strategically adding or removing detail from the model could have a great impact on the final look.

- Rigging/CFX created a small UI to manage their Ragdoll rigs. Otherwise, nothing significant changed aside from running the simulation before submitting a new version for review.

- Most importantly, the review process had to adapt, and managing it is still our biggest challenge. Until then, animation and CFX were distinct disciplines: if something needed to be dynamic, CFX handled it; if it was animated, animation did. With Ragdoll, we added a dynamic component to animation, which required more consideration during reviews.

Even if the animated part of a character gets approved, the dynamic part may still need some iterations. This conflicts with the traditional, separated, and sequential pipeline: the dynamic portion of the shot happens during the animation step, so the shot can't proceed down the pipeline.

After Ragdoll

Like any tool, Ragdoll is good at some tasks and not at others.

Key Insight

"Everything in need of art direction or dynamics without vertex-accurate collisions gets a Ragdoll pass."

- Skirts, and sometimes coats, are easy and fast enough to animate with Ragdoll that we include them early, which gives us a better idea of their shapes in the final shot.

- Crowd CFX has been easier than ever with Ragdoll, as we were able to integrate the simulations into our cycles rather than running them as a post-process. This is a nice alternative to our much more labor-intensive post-crowd simulation process.

- Horse tails, manes, and reins have been almost exclusively Ragdoll-powered since the beginning.

- Pretty much all ropes and chains (or similar props) run through animation using Ragdoll.

- Ragdoll has given our animators a quick way to iterate on physically plausible ways of characters falling from cliffs.

When we started, we were cautious and passed the Ragdoll rig to our animators as a separate representation. Unfortunately, that also created an extra hurdle for those who didn't always want to swap rigs to run their simulations.

After some time (much more than we'd like to admit), we gained the confidence to include Ragdoll in our main rigs, giving every animator direct access. There has not been a single report of degraded performance or issues when all licenses were in use.

We also treat Ragdoll like any other software in our pipeline and lock the version for the duration of a project to prevent compatibility issues.

We have since also added collision puppets (Ragdoll rigs without dynamic components) for all rigs by default.

Challenges

I mentioned the review process earlier, but it's worth going into more detail.

Notes on the dynamics of an animation version have to be addressed by the animator or the rigger. Since neither department has traditionally been responsible for dynamics, rigging and animation get approved while the problem is pushed downstream.

Both animation and simulation are iterative processes. With Ragdoll sitting somewhere in the middle of both disciplines, it needs to be treated accordingly. While Ragdoll can allow artists to quickly iterate versions, traditional workflows like Vellum are often preferred by supervisors for hero simulations due to the additional detail and accuracy.

That said, Ragdoll was never meant to replace Vellum (or any traditional CFX workflow). We intended to use it primarily for mid-ground and background characters, allowing CFX artists to spend more time on hero characters, although we have used Ragdoll for characters fairly close to camera as well!

In this clip, we have characters simulated through Ragdoll (light blue) and Vellum (light green) side by side.

The following sections are from our Lead Rigging/CFX TD, who goes into more detail about how we build Ragdoll rigs and shots.

Rigging Perspective

Following the pipeline overview from our supervisor, this is a more focused look at how we, in rigging and tech art, adopted Ragdoll Dynamics at Herne Hill.

Ragdoll isn't just something we tested; we made it work at scale. That meant figuring out how to build it into our rigs, how to make it accessible to animators, and how to handle the realities of production across different characters, shots, and needs.

Setup Philosophy

The way we think about Ragdoll setups is pretty close to how we'd approach building a Houdini CFX setup: something robust, consistent, and designed to just work across most situations without extra effort. When an animator presses the “simulate” button before submitting to dailies, they shouldn't have to worry about tweaking parameters or redoing anything - the simulation should just land.

That's a big difference from setups built directly by animators. Animator-led Ragdoll setups often focus on flexibility and artistic control: tools that are ergonomic, fun to play with, and easy to tweak. Our focus is more on consistency and automation. We want the simulation to complement the animation without becoming a process in itself.

We're also using more classic Ragdoll setups in parallel, the kind that are art-directed manually using live recordings. Those are used on specific sequences, often as a first pass of animation rather than CFX. They've been especially useful for creature work or characters falling from cliffs or towers. Since those follow the same workflows you'll find in most official Ragdoll tutorials, we won't focus on them here.

Instead, I'll focus on Ragdoll not as an animation tool but as a first CFX pass for elements we want moving dynamically from the start, such as tails, hair, skirts, scarves, or ropes. We take the time to refine those setups once, properly, across several test scenes rather than adjusting them per shot. This means that one-third of the time is spent building the rig in Maya and two-thirds is spent testing it across multiple animation scenes to find stable settings that work everywhere. It's not glamorous, but it pays off: we get consistent, performant results very early in the process.

Building the Ragdoll Rig

Once the philosophy is clear, the build itself follows a pretty structured process. Everything starts in Maya, with the usual suspects: solver, group, markers, constraints. We use in-house tools to generate all of that quickly.

As for collision shapes, we originally experimented with high-res shapes extracted from skinning weights for more accurate collisions, but in practice this caused a lot of instability. So now we stick with capsules, spheres, and boxes.

Key Insight

"Simpler shapes = more stable sims"

What really takes time isn't building the setup, but testing it.

A good Ragdoll setup isn't one that looks great in one scene; it's one that behaves well in ten. So once the core is in place, we open up a range of animation shots (idle poses, running cycles, fast moves, subtle gestures) and refine.

We adjust the settings until we find the values that consistently hold up, focusing on:

- Rotate Stiffness

- Translate Stiffness

- Mass

- Limit Range

- Shape Type (Box vs. Capsule vs. Mesh)
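This refinement is done by hand, but its goal can be stated precisely: keep only the settings that stay stable in every test scene, and among those, prefer the one with the best worst case. The Python sketch below expresses that goal as a brute-force grid sweep. The `run_sim` callback, its instability score, the threshold, and the parameter names are all assumptions. They stand in for actually simulating each scene in Maya and judging the result.

```python
from itertools import product
from typing import Callable, Dict, Iterator, List, Optional

Settings = Dict[str, float]


def sweep(grid: Dict[str, List[float]]) -> Iterator[Settings]:
    """Yield every combination of the candidate values in `grid`."""
    keys = sorted(grid)
    for values in product(*(grid[k] for k in keys)):
        yield dict(zip(keys, values))


def pick_stable_settings(
    grid: Dict[str, List[float]],
    scenes: List[str],
    run_sim: Callable[[Settings, str], float],  # hypothetical: higher = less stable
    threshold: float,
) -> Optional[Settings]:
    """Return the candidate with the lowest worst-case instability across all
    scenes, skipping any candidate that exceeds `threshold` in even one scene.
    Returns None if nothing holds up everywhere."""
    best: Optional[Settings] = None
    best_worst = float("inf")
    for settings in sweep(grid):
        # A setup is only as good as its worst scene.
        worst = max(run_sim(settings, scene) for scene in scenes)
        if worst <= threshold and worst < best_worst:
            best, best_worst = settings, worst
    return best
```

As a toy example, suppose `run_sim` scores the distance from an ideal stiffness of 0.3 for an idle scene and 0.7 for a run. Sweeping stiffness over 0.1, 0.5, and 0.9 with a threshold of 0.3 picks 0.5. That value is not ideal for either scene, but it is the only one that holds up in both.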

That's where presets come in.

A setup that looks great for a static pose might fall apart on a running character. So we started building simulation presets: stable combinations of Ragdoll settings grouped by movement type. These presets can then be applied to the solver or to specific rdGroups, depending on what the shot needs.

And since we often have crowd shots with 10+ characters, managing all these solvers manually became a real pain. Animators would get lost trying to tweak settings across rigs; it wasn't scalable. That's what pushed us to develop a centralized tool.
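Here is a minimal Python sketch of the preset idea. Presets are named bundles of values keyed by movement type, and applying one writes those values either to a solver or only to selected groups under it. The scene is modeled as plain dictionaries. The preset names, attribute names, and values are placeholders, not real Ragdoll attribute names or recommended settings. In production, the writes would go through Maya.

```python
from copy import deepcopy
from typing import Dict, List, Optional

# Hypothetical presets grouped by movement type. Attribute names and values are
# placeholders, not actual Ragdoll attribute names or tuned settings.
PRESETS: Dict[str, Dict[str, float]] = {
    "idle": {"rotate_stiffness": 0.8, "translate_stiffness": 0.8, "mass": 1.0},
    "walk": {"rotate_stiffness": 1.0, "translate_stiffness": 1.0, "mass": 0.8},
    "run": {"rotate_stiffness": 1.5, "translate_stiffness": 1.2, "mass": 0.5},
}


def apply_preset(scene: dict, preset: str, solver: str,
                 groups: Optional[List[str]] = None) -> dict:
    """Return a copy of `scene` with `preset` applied.

    Scene layout (assumed): {solver: {"attrs": {...}, "groups": {name: {...}}}}.
    With `groups=None` the values go onto the solver's own attributes;
    otherwise only the named groups under that solver are changed.
    """
    values = PRESETS[preset]
    result = deepcopy(scene)  # leave the caller's scene untouched
    solver_node = result[solver]
    if groups is None:
        targets = [solver_node["attrs"]]
    else:
        targets = [solver_node["groups"][g] for g in groups]
    for attrs in targets:
        attrs.update(values)
    return result
```

Scoping a preset to individual groups means a horse's tail can get the "run" values while its mane keeps whatever it had.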

Custom Tooling

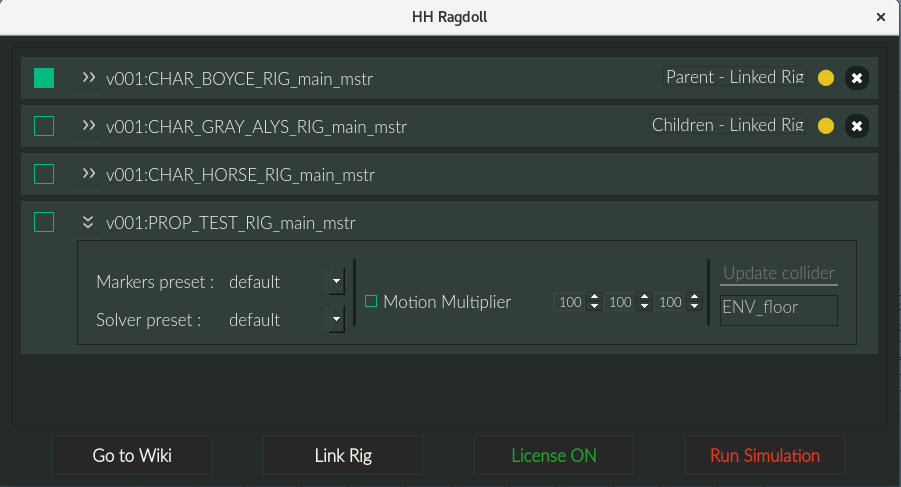

We built a custom UI to handle the growing complexity.

It started simple.

- List all Ragdoll solvers in a scene

- Let the animator select which one to simulate

- Offer the available presets per solver through a dropdown

That alone was a game changer. Suddenly, crowd shots with 15 characters became manageable. You didn't need to dive into each rig to tweak things; you had everything in one place.
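The data model behind such a UI can be quite small. The Python sketch below builds one row per solver, each with a preset dropdown and an enable checkbox, and then collects what should be simulated. The solver names and the `SolverRow` type are hypothetical. The input here is a plain dict, whereas a real tool would query the Maya scene for its Ragdoll solvers.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class SolverRow:
    """One line in the hypothetical solver list."""
    solver: str
    presets: List[str]    # options shown in the dropdown
    preset: str           # current dropdown choice
    enabled: bool = True  # whether the animator wants this solver simulated


def build_rows(available: Dict[str, List[str]]) -> List[SolverRow]:
    """One row per solver, sorted by name, defaulting to its first preset."""
    return [
        SolverRow(name, list(presets), presets[0] if presets else "")
        for name, presets in sorted(available.items())
    ]


def choose(row: SolverRow, preset: str) -> None:
    """Dropdown callback: only accept presets that exist for this solver."""
    if preset not in row.presets:
        raise ValueError(f"{preset!r} is not available for {row.solver}")
    row.preset = preset


def simulation_queue(rows: List[SolverRow]) -> List[Tuple[str, str]]:
    """(solver, preset) pairs for every enabled row, in display order."""
    return [(r.solver, r.preset) for r in rows if r.enabled]
```

Keeping the rows as plain data, separate from the widgets, makes the "everything in one place" view cheap to rebuild whenever rigs are referenced in or removed.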

But we didn't stop there.

We wanted animators to stay in one window for everything Ragdoll-related. So we added:

- The ability to merge solvers (so different characters could interact)

- The option to unmerge them when needed

- A way to assign environment geometry to a solver (collision walls, floors)

- A preset editor
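Because merging already exists in Ragdoll, the UI mainly needs to remember what belonged where, so that a merge can be undone cleanly. The Python sketch below shows only that bookkeeping. `SolverRegistry` and its methods are hypothetical, solvers are reduced to lists of marker names, and the actual merge would still be performed by Ragdoll.

```python
from typing import Dict, List, Set


class SolverRegistry:
    """Tracks which markers each solver holds and where they came from."""

    def __init__(self, solvers: Dict[str, List[str]]):
        self.solvers: Dict[str, List[str]] = {s: list(m) for s, m in solvers.items()}
        # Remember each marker's original solver so merges can be reversed.
        self.origin: Dict[str, str] = {
            marker: s for s, markers in solvers.items() for marker in markers
        }
        # Collision geometry (floors, walls) registered per original solver.
        self.environment: Dict[str, Set[str]] = {s: set() for s in solvers}

    def merge(self, target: str, sources: List[str]) -> None:
        """Move all markers of `sources` into `target` so characters interact."""
        for src in sources:
            if src != target:
                self.solvers[target].extend(self.solvers.pop(src))

    def unmerge(self, target: str) -> None:
        """Send every marker in `target` back to the solver it started in."""
        markers = self.solvers[target]
        self.solvers[target] = [m for m in markers if self.origin[m] == target]
        for m in markers:
            owner = self.origin[m]
            if owner != target:
                self.solvers.setdefault(owner, []).append(m)

    def assign_environment(self, solver: str, geometry: str) -> None:
        """Register environment geometry as a collider for an active solver."""
        if solver not in self.solvers:
            raise KeyError(f"{solver} is merged into another solver or does not exist")
        self.environment[solver].add(geometry)

    def colliders(self, solver: str) -> Set[str]:
        """Environment geometry for a solver: its own plus that of every
        solver currently merged into it."""
        owners = {self.origin[m] for m in self.solvers[solver]} | {solver}
        return set().union(*(self.environment[o] for o in owners))
```

Storing each marker's origin makes unmerging independent of how many merges happened in between. Every marker simply goes back to the solver it started in.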

Most of these are features that already exist in the Ragdoll menu.

We didn't reinvent anything; we just made the essential features more accessible, especially when working with multiple rigs. Instead of relying on viewport or outliner selections, everything is centralized and filtered to what animators actually need to touch.

This editor lets animators modify solver and rdGroup attributes directly from the UI. Imagine the equivalent of a channel box for every solver and marker group in the scene, all side by side. That's not a big deal when working on a single character, but in a shot with 15 referenced rigs, it changes everything.

Motion Multiplier + Wind

Some setups don't hold up when the character travels a lot through the world: the travel itself creates instability.

That's when we introduced the motion multiplier.

It's a concept we often use in Houdini CFX: before running a simulation, we reduce the character's world-space motion, then restore it after the simulation. A character running across the screen with a 0.5 multiplier still runs the same way but only travels half as far during the simulation. With a multiplier of 0, the character runs in place.
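The multiplier can be written as a pair of transforms around the simulation. Let the root position be `p(t)`, the reference position be `p0`, and the multiplier be `m`. During simulation the root follows `p0 + m * (p(t) - p0)`. Afterwards, the removed offset `(1 - m) * (p(t) - p0)` is added back to the simulated result. The Python sketch below makes several assumptions: the multiplier scales only the root's world translation (rotations and local animation are untouched), the first frame serves as `p0`, and positions are plain 3-tuples per frame. The function names are hypothetical, and this is not Herne Hill's implementation.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def scale_travel(root: List[Vec3], m: float) -> Tuple[List[Vec3], List[Vec3]]:
    """Scale the root's travel relative to the first frame.

    Returns (scaled_root, removed_offsets). Simulate against scaled_root,
    then add removed_offsets back with restore_travel().
    """
    if not root:
        return [], []
    p0 = root[0]
    scaled, removed = [], []
    for p in root:
        s = tuple(a + m * (b - a) for a, b in zip(p0, p))
        scaled.append(s)
        removed.append(tuple(b - c for b, c in zip(p, s)))  # (1 - m) * (p - p0)
    return scaled, removed


def restore_travel(simulated: List[Vec3], removed: List[Vec3]) -> List[Vec3]:
    """Add the removed world offset back to one simulated point, per frame.
    For many points, apply the same per-frame offset to each of them."""
    return [tuple(a + d for a, d in zip(q, r)) for q, r in zip(simulated, removed)]
```

With `m = 0.5`, a root moving 0, 2, 4 units along X is simulated at 0, 1, 2, and a tail point simulated at (0, 1, 0), (1, 1, 0), (2, 1, 0) is restored to (0, 1, 0), (2, 1, 0), (4, 1, 0). The restore step is a pure per-frame translation, so it cannot reintroduce the instability that the large travel caused.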

| Motion multiplier 100% | Motion multiplier 50% |
|---|---|

By decoupling world motion from local dynamics, we gain massive stability. But of course, we didn't want to lose the feeling of movement, that sensation of wind, of speed.

So we added a wind field whose direction is always opposite to the character's movement. That way, even when we neutralize motion for the sake of simulation, we keep the physical cues of movement alive: the character doesn't travel, but their skirt flaps in the wind. It sounds like a trick, but it feels real.
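Here is one way to derive that wind, sketched in Python: estimate the root's velocity from the original, unscaled animation and point the wind against it. Two details are assumptions made for this sketch, not details from the setup described above. The first is scaling the wind by `(1 - m)`, so that it replaces exactly the share of travel the motion multiplier removed. The second is the `gain` parameter. How the resulting vector drives a Ragdoll wind field is left out.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def root_velocity(root: List[Vec3], fps: float) -> List[Vec3]:
    """Per-frame velocity in units per second: central differences inside
    the range, one-sided differences at the first and last frame."""
    n = len(root)
    if n < 2:
        return [(0.0, 0.0, 0.0)] * n
    velocities = []
    for i in range(n):
        lo, hi = max(i - 1, 0), min(i + 1, n - 1)
        frames = hi - lo
        velocities.append(
            tuple((b - a) * fps / frames for a, b in zip(root[lo], root[hi]))
        )
    return velocities


def compensating_wind(root: List[Vec3], fps: float, m: float,
                      gain: float = 1.0) -> List[Vec3]:
    """Wind velocity per frame, pointing against the character's travel.

    (1 - m) is the fraction of travel removed by the motion multiplier,
    so m = 1 (full travel) yields no wind and m = 0 the full headwind.
    """
    scale = -gain * (1.0 - m)
    return [tuple(scale * c for c in v) for v in root_velocity(root, fps)]
```

For a root moving one unit per frame along +X at 24 fps with `m = 0.5`, the wind is 12 units per second along -X. With `m = 1` it drops to zero, matching the idea that the wind only stands in for motion the simulation never saw.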

| Wind Field Enabled | Wind Field Disabled |
|---|---|

Production Changes

Integrating Ragdoll gave us something we hadn't seen in animation before: representative dynamics.

From the first animation version, right after the blocking stage, we could preview tails, chains, or cloth reacting to movement. It brought motion into the process early, and that alone helped improve both reviews and decision-making.

That said, rolling it out at scale wasn't perfect. Ragdoll is a new tool for many on the team. While some curious and adventurous animators dove in, explored presets, and learned how to push the limits, others had very limited time, sometimes just a day, to pick it up. Given our integration and the way Ragdoll fits into our workflow, many of the online tutorials were less applicable or harder to translate into our context.

As a result, many animators used the tool as a black box: press simulate and get something moving. But when a supervisor note came in about dynamics rather than timing or posing, they sometimes struggled to respond. Without understanding what's under the hood, adjusting the simulation became frustrating. We, as rigging and CFX artists, often had to open the animation scenes ourselves to tweak the setup and address the note. Once we stepped in, the shot would often get mentally tagged as “rigging's responsibility”, meaning we were expected to handle all future Ragdoll sims on it. That's counterproductive: Ragdoll is meant to give animators more freedom, not to signal that anything complex has to go back to rigging/CFX.

Beyond the workflow, there are technical limitations. Compared to a detailed Vellum simulation, Ragdoll lacks the resolution to fully reshape high-detail cloth meshes. For example, robes or skirts with sculpted wrinkles hold those folds rigidly throughout the simulation.

That raises questions about modeling intent.

"Should assets be sculpted for final CFX later, or neutralized for Ragdoll now?"

For rigid props, ropes, or tails, Ragdoll has been a solid win. It's quick, stable, and usually good enough for a final version without any handoff to Houdini.

Next Steps

Ragdoll isn't a replacement for Vellum or traditional cloth sims, but it's become an important tool in our pipeline. Used right, it helps us move faster, visualize earlier, and reduce the number of shots needing full CFX down the line.

But it's still a tool that requires ownership. Without proper understanding, it can create confusion. So we're working on bridging that gap: refining the tools, improving accessibility, and clarifying when and how to use Ragdoll effectively.

Our goal stays the same: reliable dynamics early in the process, without compromising final quality or artist clarity. Most shots will still need Vellum. Some notes will still require rigging tweaks. But we now have a dynamic layer we can trust for a big part of production, and that's already a big shift.

A note from Marcus, founder of Ragdoll Dynamics

Thank you so much Dominik and everyone at Herne Hill for sharing your process with us! The results speak for themselves and we hope to see more from you in the future!